Data & Process

Data

The training data is provided in .jsonl (JSON Lines) format: a series of JSON records, one per line.

Process

To generate Reddit comments, we first needed a lot of Reddit data. We tried a number of different methods of scraping r/AmITheAsshole, none of which provided enough data on its own, so the downloads we provide are an aggregated set that we used for training.

We started with the following queries:

- Top 1000 AITA posts all time

- All posts on AITA, filtered

- All 50+ upvote posts on AITA from 2020

The first two did not provide enough data, especially after filtering, so we focused on the last one for our training set. Scraping all of r/AmITheAsshole's posts from 2020 gave us an ungodly amount of data: hundreds of thousands of rows and almost a gigabyte, all of it pure text! This was way too big to work with, so we set two initial guidelines:

- Posts had to be less than 2000 characters

- Posts had to have more than 50 upvotes
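The two guidelines boil down to a simple predicate. Below is a minimal Python sketch, assuming each post is a dict with `selftext` (the post body) and `score` (its upvote count) keys, modeled on Reddit's submission fields; `passes_guidelines` and `filter_posts` are hypothetical names, not part of any library.

```python
from typing import Iterable, Iterator

# Thresholds taken from the two guidelines above.
MAX_CHARS = 2000  # posts had to be shorter than this
MIN_SCORE = 50    # posts needed more than this many upvotes


def passes_guidelines(post: dict) -> bool:
    """True if the post body is under MAX_CHARS and its score is above MIN_SCORE."""
    return len(post["selftext"]) < MAX_CHARS and post["score"] > MIN_SCORE


def filter_posts(posts: Iterable[dict]) -> Iterator[dict]:
    """Lazily yield only the posts that meet both guidelines."""
    return (p for p in posts if passes_guidelines(p))


# Toy posts, made up for illustration.
posts = [
    {"selftext": "x" * 500, "score": 120},   # kept
    {"selftext": "x" * 2500, "score": 300},  # too long
    {"selftext": "x" * 100, "score": 10},    # too few upvotes
]
kept = list(filter_posts(posts))
print(len(kept))  # 1
```

Because Pushshift only records a post as it goes up, in practice the length check can run on the Pushshift dump, while the score check has to wait until the post is looked up on Reddit.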

Reddit's API only returns the first 1000 results for a search and won't let you paginate past them. The majority of our scraping was done with two Python API wrappers: PRAW, which wraps Reddit's own API, and PSAW, which wraps pushshift.io, a website and database that logs all of the posts on Reddit as they get posted. After filtering out posts of 2000 characters or more, we were left with a much more reasonable... 101,000 posts, weighing in at 141 MB.

Even though Pushshift provides a much larger dataset, it only logs posts when they go up, so it can't provide dynamic information like the number of upvotes a post received or its best comments. After filtering the larger dataset down to the posts that fit our length criterion, we queried Reddit for those AITA submissions to get their upvote counts and top comments. The training data was generated in three steps:

- Check whether the post has more than 50 upvotes

- If it does, get its top 4 comments

- Log each post/comment pair as a line in a new JSON Lines file
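Here is a minimal Python sketch of those three steps. It works on in-memory dicts rather than live Reddit data: the `selftext`/`score`/`comments` input keys, the `post`/`comment` output fields, and `log_pairs` itself are hypothetical, and sorting comments by score stands in for Reddit's own "top" comment sort. The output is JSON Lines, matching the format of the training data.

```python
import io
import json
from typing import Iterable, TextIO

MIN_SCORE = 50  # step 1: a post needs more than this many upvotes
TOP_N = 4       # step 2: how many top comments to keep per post


def log_pairs(posts: Iterable[dict], out: TextIO) -> int:
    """Write one JSON object per line for each qualifying post/comment pair.

    Each post is a dict with hypothetical keys "selftext", "score", and
    "comments" (a list of (text, score) tuples). Returns the lines written.
    """
    written = 0
    for post in posts:
        # Step 1: skip posts that don't have more than MIN_SCORE upvotes.
        if post["score"] <= MIN_SCORE:
            continue
        # Step 2: keep the TOP_N highest-scoring comments.
        top = sorted(post["comments"], key=lambda c: c[1], reverse=True)[:TOP_N]
        # Step 3: log each post/comment pair as its own JSON line.
        for text, _score in top:
            out.write(json.dumps({"post": post["selftext"], "comment": text}) + "\n")
            written += 1
    return written


# Toy input standing in for data fetched from Reddit.
posts = [
    {"selftext": "AITA for not lending my car?", "score": 75,
     "comments": [("NTA, your car.", 40), ("YTA.", 12), ("ESH", 3),
                  ("NAH", 9), ("lol", 1)]},
    {"selftext": "WIBTA if I moved out?", "score": 20,
     "comments": [("NTA", 5)]},
]
buf = io.StringIO()
n = log_pairs(posts, buf)
rows = [json.loads(line) for line in buf.getvalue().splitlines()]
print(n)  # 4
```

Writing to any text stream (here an `io.StringIO`) keeps the function easy to test; pointing it at an open file produces the same JSON Lines on disk.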

We started with the first 33,000 posts. From the resulting post/comment pairs, we filtered out any rows whose comment did not start with "YTA", "NTA", "NAH", or "ESH" (You're the Asshole, Not the Asshole, No Assholes Here, Everyone Sucks Here), the verdicts people lead with to give their summary judgement of the poster. The resulting ~7,000 rows of data make up the "Neutral" dataset.
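The verdict filter is a prefix check on each comment. A minimal sketch, assuming each row is a dict with a `comment` field (a hypothetical name); `keep_verdict_rows` is likewise illustrative.

```python
from typing import Iterable, List

# The four verdict acronyms a usable comment must start with.
VERDICTS = ("YTA", "NTA", "NAH", "ESH")


def keep_verdict_rows(rows: Iterable[dict]) -> List[dict]:
    """Keep rows whose comment starts with a verdict, ignoring leading whitespace."""
    # str.startswith accepts a tuple and matches if any of the prefixes fits.
    return [r for r in rows if r["comment"].lstrip().startswith(VERDICTS)]


rows = [
    {"post": "p1", "comment": "NTA, your house, your rules."},
    {"post": "p1", "comment": "Info: how old is she?"},
    {"post": "p2", "comment": " YTA. Apologize."},
    {"post": "p2", "comment": "ESH honestly"},
]
neutral = keep_verdict_rows(rows)
print(len(neutral))  # 3
```

The check is case-sensitive, so a comment opening with a lowercase "nta" would be dropped; whether to normalize case first is a judgement call.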

Then we ran into a problem. We could take a subset of the Neutral dataset by selecting only its "NTA" rows to make our NTA dataset, but it turns out people on r/AmITheAsshole think posters are in the right much more often than in the wrong. We had way too few "YTA" post/comment pairs for our judgemental YTA model to work, so we needed more data and took another chunk of posts.
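Counting verdicts is a quick way to spot that kind of imbalance before training. A minimal sketch, assuming each row is a dict with a hypothetical `comment` field that already starts with one of the four verdicts; since every verdict is exactly three characters long, the first three characters identify it.

```python
from collections import Counter
from typing import Iterable, List


def verdict_of(row: dict) -> str:
    """Return the verdict acronym; every verdict is exactly three characters."""
    return row["comment"].lstrip()[:3]


def verdict_counts(rows: Iterable[dict]) -> Counter:
    """Count how many rows carry each verdict."""
    return Counter(verdict_of(r) for r in rows)


def subset(rows: Iterable[dict], verdict: str) -> List[dict]:
    """Select the rows whose comment carries the given verdict."""
    return [r for r in rows if verdict_of(r) == verdict]


# Toy rows, already filtered to start with a verdict.
neutral = [
    {"post": "p1", "comment": "NTA at all"},
    {"post": "p2", "comment": "NTA, she was rude"},
    {"post": "p3", "comment": "NAH"},
    {"post": "p4", "comment": "YTA, sorry"},
]
counts = verdict_counts(neutral)
print(counts.most_common())  # [('NTA', 2), ('NAH', 1), ('YTA', 1)]
nta = subset(neutral, "NTA")
```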

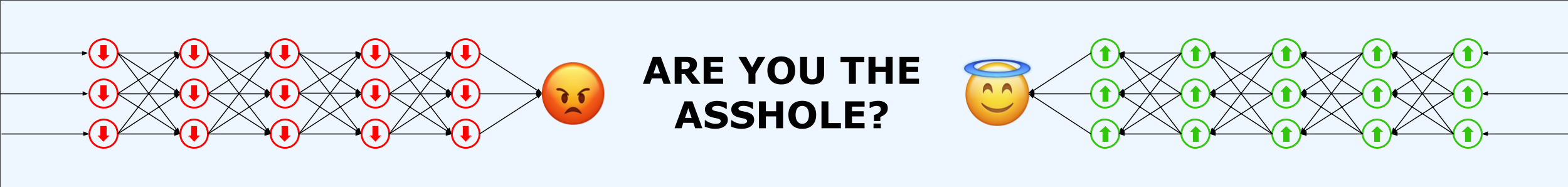

The next 33,000 posts we ran turned out to be enough to give us all the lucid tongue-lashings we needed for the YTA dataset. With both biased datasets prepared, we fed them (both are available to download above) into the AI and got the models you see in red and green on the site today.
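Assembling the two biased datasets amounts to pooling rows with the right verdict across chunks and writing them back out as JSON Lines. The sketch below is hypothetical: the function names, the `comment` field, and the `yta.jsonl` file name are ours, and drawing NTA rows from the first chunk only mirrors the description of taking them from the Neutral dataset.

```python
import json
import tempfile
from pathlib import Path
from typing import Iterable, List


def build_biased_dataset(chunks: Iterable[List[dict]], verdict: str) -> List[dict]:
    """Pool the rows carrying `verdict` from every processed chunk."""
    return [r for chunk in chunks for r in chunk
            if r["comment"].lstrip().startswith(verdict)]


def write_jsonl(rows: Iterable[dict], path: Path) -> None:
    """Write rows as JSON Lines: one JSON object per line."""
    with path.open("w", encoding="utf-8") as f:
        for r in rows:
            f.write(json.dumps(r) + "\n")


# Toy verdict-filtered rows from two chunks of posts.
chunk1 = [{"post": "a", "comment": "NTA"}, {"post": "b", "comment": "NTA!"},
          {"post": "c", "comment": "YTA"}]
chunk2 = [{"post": "d", "comment": "YTA, big time"}, {"post": "e", "comment": "NAH"}]

nta = build_biased_dataset([chunk1], "NTA")          # first chunk was enough
yta = build_biased_dataset([chunk1, chunk2], "YTA")  # needed both chunks

with tempfile.TemporaryDirectory() as d:
    out = Path(d) / "yta.jsonl"
    write_jsonl(yta, out)
    lines = out.read_text(encoding="utf-8").splitlines()
print(len(nta), len(lines))  # 2 2
```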